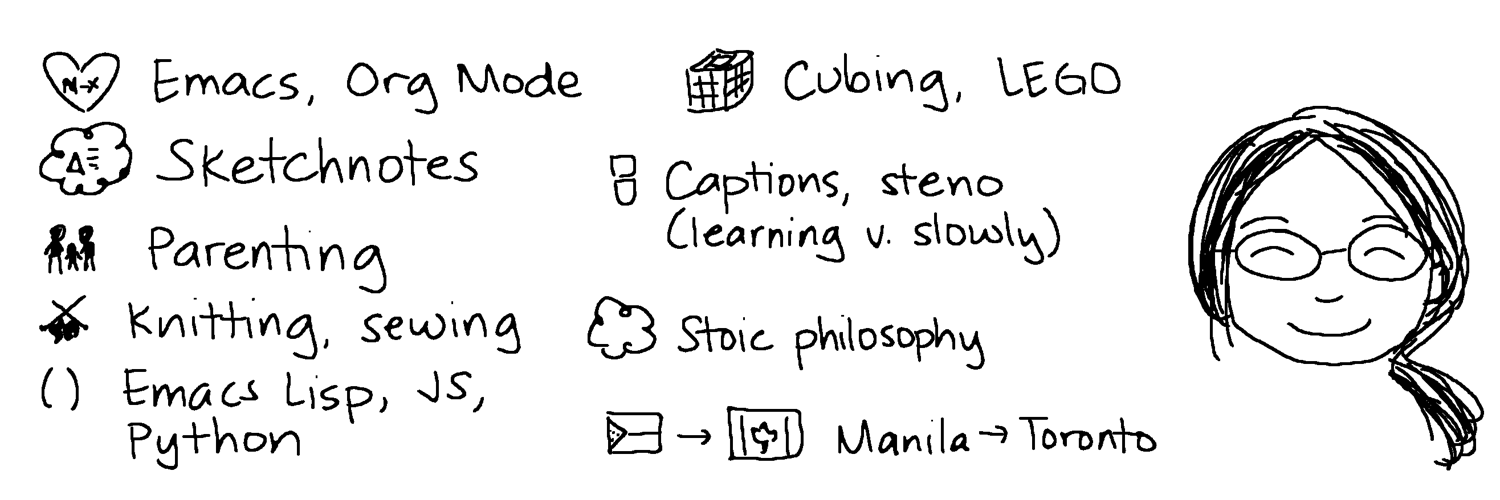

Interests include: #Emacs, #OrgMode, #elisp, #nodejs, #python, #sketchnotes, #parenting, #cooking, #gardening, #knitting, #sewing, #lego, #captioning, #plover #steno, and #stoic philosophy. Originally from Manila, now in Toronto. Married to a Vim guy (go figure) and raising a 7-year-old (editor preference unknown), along with two very loud cats.

Blog: https://sachachua.com (mostly Emacs News these days). Sketches: https://sketches.sachachua.com. I also maintain planet.emacslife.com and subed.el.
