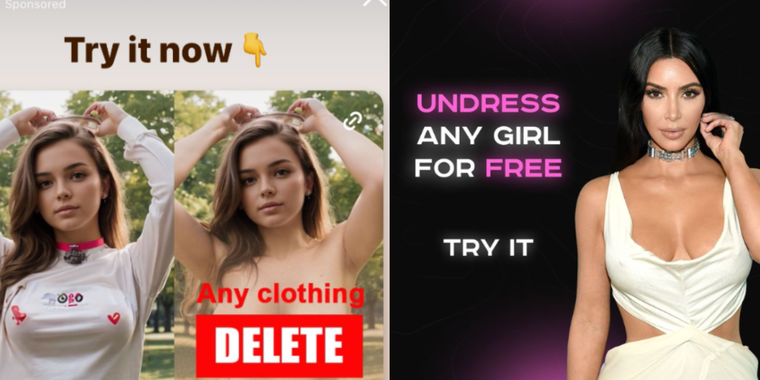

Instagram Advertises Nonconsensual AI Nude Apps

Instagram is profiting from several ads that invite people to create nonconsensual nude images with AI image generation apps, once again showing that some of the most harmful applications of AI tools are not hidden on the dark corners of the internet, but are actively promoted to users by social media companies unable or unwilling to enforce their policies about who can buy ads on their platforms.

While parent company Meta’s Ad Library, which archives ads on its platforms, who paid for them, and where and when they were posted, shows that the company has taken down several of these ads previously, many ads that explicitly invited users to create nudes and some ad buyers were up until I reached out to Meta for comment. Some of these ads were for the best known nonconsensual “undress” or “nudify” services on the internet.

Add comment